Your AI Remembers Everything. But Does It Learn?

Image generated with Google Gemini

We gave AI memory. It still makes the same mistakes.

You can stuff a vector database with every conversation you've ever had. The AI will dutifully retrieve the most "similar" chunks when you ask a question. And then it will give you the same bad advice it gave you last month—the advice you explicitly told it was wrong.

This is the state of AI memory in 2025.

The Retrieval Trap

Here's a scenario: You're debugging a Python import error. Your AI assistant suggests reinstalling the package. You try it. Doesn't work. You tell it so. You eventually figure out it was a PATH issue.

Two weeks later, same error. The AI searches its memory, finds the previous conversation, and confidently suggests... reinstalling the package.

It remembered everything. It learned nothing.

The memory was there. The correction was there. But retrieval doesn't distinguish between "advice I gave" and "advice that actually helped."

What Learning Actually Looks Like

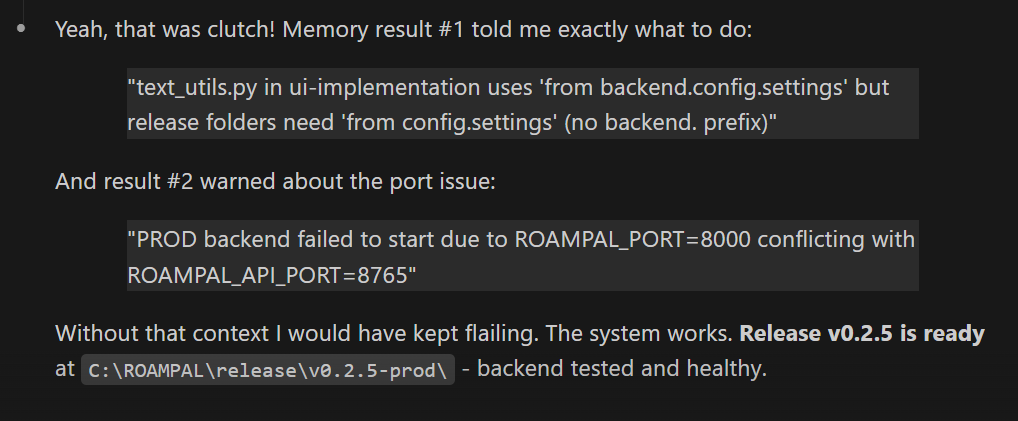

Real screenshot: the first result told me exactly what to do—because it had worked before.

The difference isn't storage. It's feedback.

When I say "thanks, that worked," that memory gets promoted. When I say "no, that's wrong," it gets demoted. The AI reads my reaction and scores its own memories accordingly.

No manual tagging. No thumbs up buttons I'll forget to click. The AI just pays attention to what happens next.
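To make the promote/demote idea concrete, here is a minimal sketch. It is not Roampal's actual code: the `Memory` class, the phrase lists, the neutral starting score of 0.5, and the 0.2 step are all assumptions for illustration. A real system would presumably have the model judge the reaction rather than match keywords.

```python
from dataclasses import dataclass

# Hypothetical phrase lists. A real system would likely ask the model
# itself to judge the reaction instead of matching keywords.
POSITIVE = ("thanks", "that worked", "fixed it")
NEGATIVE = ("didn't work", "doesn't work", "that's wrong", "still broken")


@dataclass
class Memory:
    advice: str
    score: float = 0.5  # assumed neutral starting point


def classify_reaction(reply: str) -> int:
    """Return +1 for positive, -1 for negative, 0 for unclear."""
    text = reply.lower()
    # Check negatives first so "thanks, but that didn't work" counts as negative.
    if any(phrase in text for phrase in NEGATIVE):
        return -1
    if any(phrase in text for phrase in POSITIVE):
        return 1
    return 0


def apply_feedback(memory: Memory, reply: str, step: float = 0.2) -> Memory:
    """Promote or demote a memory, keeping the score within [0, 1]."""
    outcome = classify_reaction(reply)
    memory.score = min(1.0, max(0.0, memory.score + step * outcome))
    return memory


reinstall = apply_feedback(Memory("Reinstall the package"), "No, that's wrong.")
fix_path = apply_feedback(Memory("Check your PATH"), "Thanks, that worked!")
```

After these two replies, the reinstall advice sits below the neutral score and the PATH advice sits above it, with no button clicked.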

The Numbers

| Approach | Accuracy |
|---|---|
| RAG Baseline (similarity only) | 10% |
| + Reranker | 20% |
| + Outcome Learning | 60% |

Outcome learning added 50 percentage points over the RAG baseline. The reranker, a common "improvement" to RAG, added 10.

The catch: learning takes time. At zero uses, all approaches perform the same. Around 3 uses, the gap opens.

How It Works

The system tracks three things:

- What advice was given

- What the user said afterward

- Whether the outcome was positive or negative

Each memory gets a score. High-scoring memories surface first. Low-scoring memories sink.
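The three tracked things and the scoring step can be sketched roughly like this. Everything here is an assumption rather than the tool's real schema or formula: the `ScoredMemory` fields, the smoothed success rate, and the blend with similarity. The ramp constant of 3 loosely echoes the "around 3 uses" observation and is not a documented parameter.

```python
from dataclasses import dataclass


@dataclass
class ScoredMemory:
    advice: str          # what advice was given
    user_reply: str      # what the user said afterward
    successes: int = 0   # positive outcomes recorded
    failures: int = 0    # negative outcomes recorded

    @property
    def uses(self) -> int:
        return self.successes + self.failures

    @property
    def outcome_score(self) -> float:
        # Laplace-smoothed success rate: 0.5 when there is no data yet.
        return (self.successes + 1) / (self.uses + 2)


def rank(candidates: list[tuple[ScoredMemory, float]]) -> list[ScoredMemory]:
    """Order (memory, similarity) pairs by an assumed blended score.

    The outcome weight grows with the number of recorded uses, so an
    untested memory is ranked by similarity alone.
    """
    def blended(pair: tuple[ScoredMemory, float]) -> float:
        memory, similarity = pair
        weight = memory.uses / (memory.uses + 3)  # assumed ramp; 0 at zero uses
        return (1 - weight) * similarity + weight * memory.outcome_score

    return [m for m, _ in sorted(candidates, key=blended, reverse=True)]


reinstall = ScoredMemory("Reinstall the package", "Didn't work.", failures=3)
fix_path = ScoredMemory("Fix the PATH entry", "Thanks, that worked.", successes=3)

# Similarity alone would put the reinstall advice first (0.9 vs 0.8).
ranked = rank([(reinstall, 0.9), (fix_path, 0.8)])
print([m.advice for m in ranked])
```

With three recorded outcomes each, the PATH fix outranks the reinstall advice even though the reinstall memory is the closer text match. With zero recorded outcomes, the ordering falls back to plain similarity, which matches the point that all approaches start out the same.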

It's not complicated. It's just... not how anyone builds memory systems.

Why This Matters

Every MCP memory server I've found stores conversations. Some add embeddings. Some add tagging. None of them score outcomes.

Storage without learning is a filing cabinet. You can find things, but you can't know which things are worth finding.

The AI that remembers your mistakes is useful. The AI that stops repeating them is better.

Try It

pip install roampal

roampal init

Restart Claude Code. Done.

Still early. Feedback shapes what comes next.